Then the Lord God formed a man from the dust of the ground and breathed into his nostrils the breath of life, and the man became a living being. (Genesis 2:7)

The idea of creating artificial life has fascinated mankind for millennia. Across the ancient world, tales abound of mechanical animals, birds, and men pieced together by human hands from inanimate materials. The Talmud even describes holy men close to God animating golems by breathing life into clay figures with Hebrew words. Far from being simple clockwork constructs, these creatures were said to have been imbued by their creators with complex and independent intelligence.

Though there is no evidence that any of these advanced and far-fetched artificial creations ever existed, their lesser cousins most certainly did. In 1900 sponge divers discovered the remains of an ancient analogue computer off the coast of the island of Antikythera. Using a series of bronze cogs and gears, this machine could predict the future positions of the planets, the sun, and the moon in relation to Earth, as well as the timing of lunar and solar eclipses.

This rudimentary form of machine intelligence encouraged the belief that the workings of the mind are purely physical and could therefore be mechanically replicated. The idea of a metaphysical soul or intellect separate from the physical realm, however, denied the possibility of creating mechanical life. Opposing this was the theory that all thought could be rationalised and formalised, much like algebra or geometry. Whether an artificial thinking being could ever be created hinged on the rivalry between these two schools of philosophical thought.

Man’s obsession with playing God ensured that the quest to fashion an artificial intelligence would never die. The technological revolution of the 20th century reinvigorated this quest, as the advent of the computer promised a machine capable of replicating human thought. The groundwork had been laid decades earlier: in 1871 Henry Pickering Bowditch showed that a heart’s contraction was all-or-none, meaning the muscle either responded completely or not at all. Later research found that neurons work the same way, either firing fully or not firing at all. This suggested that all brain activity might simply be a circuit of neurons switching on and off in particular sequences.

If this were the case, then an artificial intelligence could be constructed by replicating the circuitry of the human mind with a series of electrical gates that could similarly open and close. During the 1950s, ’60s, and ’70s the world was astonished as computers made giant leaps forward in understanding and replicating human thought and logic. With the Cold War in full swing, these projects attracted a great deal of government and military funding because of their strategic potential.
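To make the gate analogy concrete, here is a minimal Python sketch of an all-or-none "neuron" modelled as a simple threshold unit, loosely in the spirit of McCulloch and Pitts's 1943 formal neuron. It is an illustration under simplifying assumptions, not a model of real neurons: the function names, weights, and thresholds are hypothetical choices made for this example. Each unit either fires (1) or stays silent (0), and wiring several together produces a small logic circuit.

```python
def threshold_unit(inputs, weights, threshold):
    """All-or-none unit: fires (returns 1) only if the weighted sum of its
    binary inputs reaches the threshold; otherwise stays silent (returns 0)."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# With suitable (illustrative) weights and thresholds, one unit acts as a gate.
def and_gate(a, b):
    return threshold_unit([a, b], [1, 1], threshold=2)


def or_gate(a, b):
    return threshold_unit([a, b], [1, 1], threshold=1)


def not_gate(a):
    # A negative (inhibitory) weight with threshold 0 inverts the input.
    return threshold_unit([a], [-1], threshold=0)


def xor_gate(a, b):
    # A single threshold unit cannot compute XOR, but a small network can:
    # "a or b, but not both".
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "->", xor_gate(a, b))
```

The point of the design is that nothing more than on/off units and their wiring is needed to build up behaviour no single unit can produce, which is the intuition behind treating the brain as a circuit of switching neurons.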

It soon became apparent, however, that AI’s early proponents had been overly optimistic and underestimated the enormity of the task before them.

In 1950 Alan Turing, one of the leading minds in the field of artificial intelligence, published a paper, ‘Computing Machinery and Intelligence’, that introduced the now famous Turing Test. Turing proposed that a machine could be described as intelligent if it exhibited behaviour indistinguishable from, or equivalent to, that of a human. Though computers were soon able to process and respond to natural languages like English, solve complex problems, and play checkers and chess, they were far from passing the Turing Test, and by 1974 the enthusiastic funding had begun to dry up, bringing on the first ‘AI winter’.

By the 1980s the early focus on finding shortcuts and methods by which machines could learn or reach an end goal had been supplanted by expert systems, which solved problems in specific areas by drawing on expert knowledge of those fields. This school of thought was based on the idea that intelligence was the ability to draw upon a large and diverse reservoir of knowledge to solve a myriad of problems. A truly intelligent machine that could learn, however, was still an unattainable holy grail, and soon a second ‘AI winter’ set in.

The advent of the internet age and the growing importance of computers in technology and administration brought an end to this drought of funding in the 1990s. Since then the science-fiction worlds populated by artificial intelligences and robots have been coming ever closer to reality. This year alone has seen Google buy the successful artificial intelligence startup DeepMind for more than $400 million, while Elon Musk, Mark Zuckerberg, and Ashton Kutcher have invested more than $40 million in Vicarious FPC.

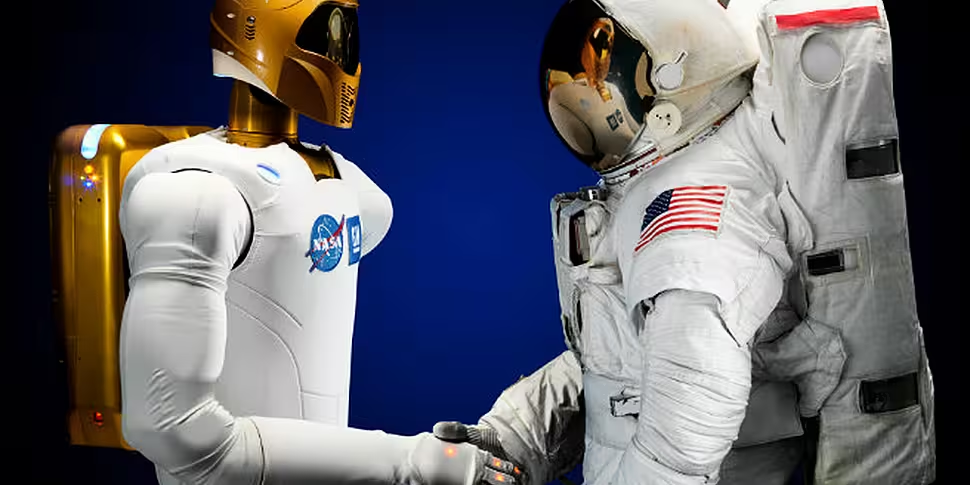

Though we have yet to build a machine that can pass the Turing Test, advances in computer engineering, programming, and related fields, coupled with our growing understanding of how the brain works, have brought a computer that can consciously learn and understand ever closer to reality. The advent of an artificial being with intelligence comparable to, or far surpassing, the human intellect does, however, raise serious moral and philosophical dilemmas, and these have not been overlooked.

In 1942 Isaac Asimov formalised his Three Laws of Robotics, which have become one of the most influential and defining concepts in science-fiction writing. Designed to ensure a world in which robots improve human lives without posing a risk to them, this plot device reflected the scar that slavery left on the psyche of America and the world. Using robots and otherworldly ‘others’ as allegories for real conflicts and issues was a practice employed by many science-fiction writers.

The continuing technological revolution and the rise of the computer, however, saw many thinkers begin to address the problems that might arise with the creation of an artificial intelligence. These varied from author to author: some imagined worlds where machine was enslaved to man, others where man was enslaved to machine or where the two were constantly at war; even when coexistence was imagined, it was rarely, if ever, utopian.

One of the main themes running through science fiction does seem to be the incompatibility of human and machine societies. Many have seen this trend as an indictment of human nature and our historic inability to respect and coexist with other intelligent beings, including members of our own species. It has also been driven by our fear of how an artificial intelligence would react to being brought into existence. Would our creations, unbound by natural ties and evolved morals, turn against us? Is machine compatible with man?

Listen as Patrick and a panel of experts look back on the history of artificial intelligence and try to divine the future of the field from the path already trodden. Is the creation of an artificial intelligence possible? If so, why haven’t we seen one yet? And what will it mean if we ever do create a machine capable of learning, with intelligence comparable to, or surpassing, our own?